Changes to Google’s Webmaster Guidelines

Earlier this week, Google made a significant update to its Webmaster Guidelines. The original guidelines advised webmasters to view their sites in an old, text-only browser such as Lynx, because that was roughly how Google’s crawlers saw a page. Those kinds of outdated browsers cannot render advanced web designs or images. Google has now announced that, for optimal rendering and indexing, webmasters should allow Googlebot to access the CSS, JavaScript, and image files used throughout the site. Google also stated that using robots.txt to disallow crawling of JavaScript or CSS files will directly and negatively affect how its algorithms render your website and how your pages are indexed and ranked.
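To make the robots.txt point concrete, here is a minimal sketch in Python that tests sample rules against a few resource URLs using the standard library’s `urllib.robotparser`. The rule sets, the URLs, and the `blocked_resources` helper are all hypothetical, made up for illustration. Python’s parser also does not replicate Google’s matching exactly (it ignores wildcard patterns, for example), so treat the result as a rough local check rather than a statement of how Googlebot will behave.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks the script and stylesheet directories.
BLOCKING_RULES = """\
User-agent: *
Disallow: /js/
Disallow: /css/
"""

# Hypothetical revision that leaves those directories crawlable.
OPEN_RULES = """\
User-agent: *
Disallow: /admin/
"""

# Hypothetical resource URLs a page might load.
RESOURCES = [
    "https://www.example.com/js/app.js",
    "https://www.example.com/css/site.css",
    "https://www.example.com/images/logo.png",
]


def blocked_resources(robots_txt, urls, agent="Googlebot"):
    """Return the URLs that the given rules forbid the agent from fetching."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    # With the blocking rules, the files under /js/ and /css/ are reported.
    print(blocked_resources(BLOCKING_RULES, RESOURCES))
    # With the open rules, nothing in the list is reported.
    print(blocked_resources(OPEN_RULES, RESOURCES))
```

To run the same check against a live site, `RobotFileParser` can also load a robots.txt file from a URL with `set_url()` followed by `read()`.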

More Efficient Search Bots

Instead of viewing and indexing text alone, Google’s bots now render pages much as a modern web browser does. This lets them interpret JavaScript, CSS, and images along with the text content of each page. Because Google now indexes based on page rendering, a text-only browser is no longer an accurate or up-to-date model of what the indexing system sees. The result is a crawler that behaves more like a modern browser and indexes pages more faithfully.

Tips for Optimized Indexing

To help with these changes, Google has offered some tips to get the most out of the new indexing system:

As you allow Googlebot to access JavaScript and CSS files, make sure that your server can handle the additional load of serving those files to the crawler.

Follow the page performance optimization practices published on Google Developers. Pages that render quickly are easier for users to access and easier for Google to index. You can speed up rendering by eliminating unnecessary downloads, merging separate JavaScript and CSS files, and configuring your server to serve them compressed.

Use only common, widely supported technologies on your site so that pages render consistently across different web browsers.

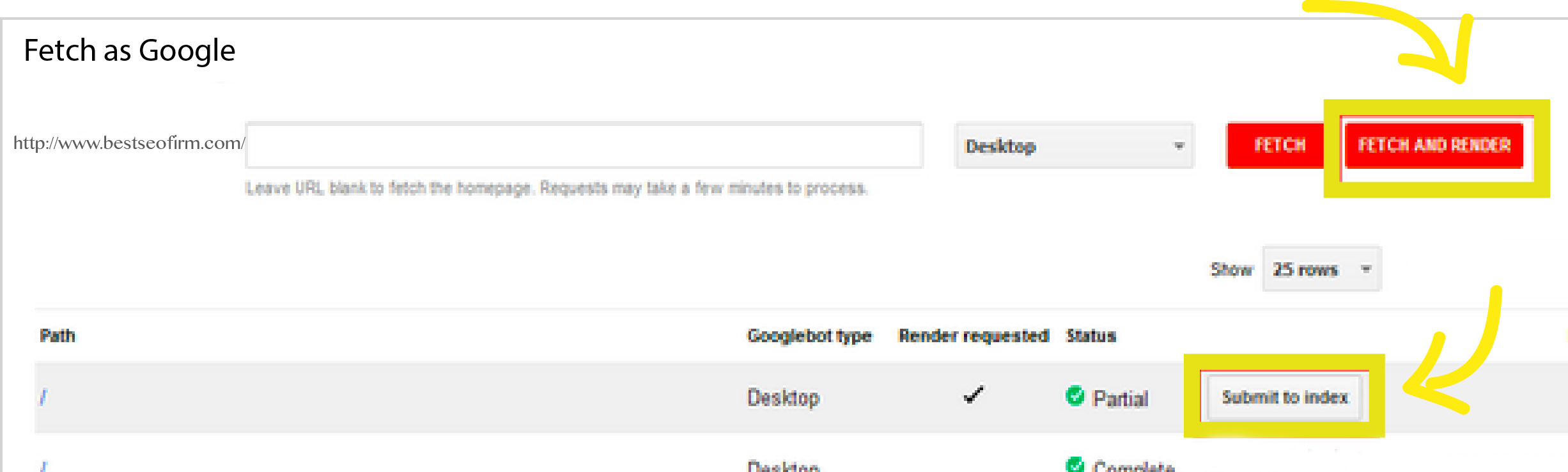

Use the updated Fetch and Render feature of Fetch as Google in Webmaster Tools to see how Google’s systems render your pages. With it, you’ll be able to identify indexing issues.
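The merging and compression tip above can be illustrated with a short Python sketch. It joins two made-up stylesheets into one bundle and compares the raw size with the gzip-compressed size. The stylesheet contents and the helper names are hypothetical. In practice, compression is usually switched on in the web server’s configuration rather than done by hand, so this only shows the effect.

```python
import gzip

# Hypothetical stylesheet contents standing in for two separate CSS files.
STYLESHEETS = [
    ".header { margin: 0; padding: 0; color: #333333; }\n" * 20,
    ".footer { margin: 0; padding: 0; color: #333333; }\n" * 20,
]


def merge_sources(sources):
    """Concatenate file contents into a single bundle, as UTF-8 bytes."""
    return "\n".join(sources).encode("utf-8")


def compressed_size(payload):
    """Return the number of bytes after gzip compression."""
    return len(gzip.compress(payload))


if __name__ == "__main__":
    bundle = merge_sources(STYLESHEETS)
    print("files merged:", len(STYLESHEETS))
    print("raw bytes:", len(bundle))
    print("gzip bytes:", compressed_size(bundle))
```

Merging reduces the number of requests a browser or crawler has to make, and compression reduces the bytes sent per request. Repetitive text such as CSS tends to compress well.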

How to Test for Optimized Indexing Using Fetch As Google

To check whether Googlebot can access the CSS and JavaScript on your website, you can use the Fetch and Render feature of Fetch as Google in Webmaster Tools. We’ll walk you through the steps below:

Select “Fetch as Google” under the Crawl tools, and enter your webpage URL. (If you want to render your homepage, just leave the field blank.) Then press “Fetch and Render,” and Googlebot will begin to crawl the page.

Once the crawl is complete, the result appears with a status such as “complete,” “partial,” or “unreachable,” depending on the outcome of the test, and you can click “Submit to Index” to ask Google to index the page. (Google’s help documentation for Fetch as Google describes each status in more detail.)

Click on a result to see which scripts and files are blocked. This shows what is being blocked, intentionally or not, by your robots.txt rules or your server. From there, you can follow the tips listed above for optimized indexing on all of your necessary web pages.
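Before or after running the steps above, it can help to have a list of the scripts, stylesheets, and images a page actually loads, so you know which files need to stay crawlable. The following Python sketch collects those URLs from a page’s HTML using the standard library. The sample HTML, the base URL, and the `ResourceCollector` class are hypothetical, and the sketch only reads static markup; it does not see files that scripts load at run time.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical page markup used for illustration.
SAMPLE_HTML = """
<html><head>
<link rel="stylesheet" href="/css/site.css">
<script src="js/app.js"></script>
</head><body>
<img src="https://cdn.example.com/logo.png" alt="Logo">
</body></html>
"""


class ResourceCollector(HTMLParser):
    """Collect script, stylesheet, and image URLs referenced by a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.resources = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag in ("script", "img") and attributes.get("src"):
            self._add(attributes["src"])
        elif tag == "link" and attributes.get("href"):
            rel = (attributes.get("rel") or "").lower().split()
            if "stylesheet" in rel:
                self._add(attributes["href"])

    def _add(self, reference):
        # Resolve relative references against the page URL.
        self.resources.append(urljoin(self.base_url, reference))


def page_resources(html, base_url):
    """Return absolute URLs of the resources referenced in the HTML."""
    collector = ResourceCollector(base_url)
    collector.feed(html)
    collector.close()
    return collector.resources


if __name__ == "__main__":
    for url in page_resources(SAMPLE_HTML, "https://www.example.com/blog/post.html"):
        print(url)
```

Each URL in the resulting list can then be compared against your robots.txt rules, or looked for among the blocked resources that Fetch as Google reports.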

Photo by Roland Molnar via Flickr.

